Database Migration and Slotting

Database Slotting Strategies

As many organizations are in the process of upgrading and migrating their databases, we as DBAs need to make sure each database has an ideal target environment. The target environment needs to support the new database(s) and their applications without impacting or adding risk to the other databases on the same SQL Server instance. In this article, we will discuss what it takes to create a database slotting strategy for your SQL Server environment that can be automated, so that as your organization scales and shifts towards offering Database as a Service, you are involved only if you want to be.

Before we give you the formula for automatically determining which server should host a new database, such as one that supports your company’s mission-critical accounting application, we need to cover some basics.

Based on my experience, there are many different types of SQL Server architectures out there, but they all have some things in common.

When we design new SQL Server environments for organizations, there are 4 basic concepts that apply to any environment regardless of the version or database platform, and whether it runs in the cloud or on-premises.

- Tier of Service – Reflects the business criticality of the environment.

- Will this environment support the most important databases in the company, or is the database designed to support a line-of-business application? Typically, we see Tier 1 through Tier 3 plus non-production. We have seen up to 10 tiers of service, but to us that is overkill and very confusing to users.

- Environment Type – Classification for what the system’s use case is.

- Is this a production database or a non-production database for UAT? Based on the environment, there are typically different service level agreements (SLAs) or objectives (SLOs) that influence all the alerting, maintenance, monitoring, and other processes around the environment. And based on this, we typically do not recommend slotting databases with a non-production classification on a production system.

- Environment Size – Determines the number of resources assigned to the server.

- How many resources will the database or application need to support the workload today and over the next year? This is an important question and a tough one: how do you determine the resources a database needs now, let alone in one year?

- Service Offering – Determines if the server is dedicated to a single database/application or shared among many databases/applications.

- Certain applications require isolation because of regulatory or security considerations, or because they will scale to 10X the size of any other application and need dedicated resources. To provide a service offering for them, we need to offer a dedicated server. The other type is a common or shared service offering. This is the most common approach and is often a better long-term strategy, because if your environment has hundreds or thousands of databases, your licensing and support costs will be lower.
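The four concepts above can be captured as a small classification record that an automated slotting process compares between a database request and a candidate instance. The following is a minimal sketch, not a definitive implementation: the names `Classification` and `is_compatible` are hypothetical, and the rule that a dedicated request is never slotted onto an existing instance is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Classification:
    tier: int          # tier of service, 1 = most business critical
    environment: str   # e.g. "production", "uat", "qa", "development"
    size: str          # environment size label, e.g. "small", "large"
    offering: str      # "dedicated" or "shared"


def is_compatible(db: Classification, instance: Classification) -> bool:
    """Return True when a shared instance matches the database's tier and environment.

    Assumption: dedicated requests always get a new server, so they are
    never matched against existing instances here. Size is not compared
    because capacity is checked separately.
    """
    if db.offering != "shared" or instance.offering != "shared":
        return False
    return db.tier == instance.tier and db.environment == instance.environment
```

Matching on environment type enforces the recommendation above that non-production databases are not slotted on production systems.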

Now that we have the basics out of the way, we need to determine what information is required about a database before we can identify the ideal SQL Server instance to become its new home. And think about it: most likely we are identifying 4 SQL Server instances, not 1.

The reason is most environments have Development, Quality Assurance (QA), User Acceptance testing (UAT), and Production environments, and we as DBAs always recommend having a non-production copy of the database. Right? Because this is where we should be catching the bad code and design patterns before they are promoted to production.

Separate from the 4 questions above that we need answers to, we also need to know the capacity required by the new database and of the SQL Server instances in order to identify which servers have enough capacity or excess resources to support the new database.

This can be challenging depending on what tools and systems you have within your environment. If you are like me, you find that the existing SQL Server tools are great at showing a lot of performance data, but they are not effective at answering the following question: “Can this SQL Server instance support a database that needs the equivalent of 4 vCPUs and 22GB of memory?”

Determining the CPU, memory, and storage requirements of an existing database is more straightforward. The table below shows the resource usage of each database on a SQL Server instance.

| Database | Database Size | Memory Usage | % IO | % CPU | % Memory |
|---|---|---|---|---|---|
| MainDB | 1.5 TB | 17.1 GB | 18.29 | 68.75 | 9.39 |
| RiskDB | 452 GB | 103.2 GB | 10.71 | 10.64 | 56.78 |
| PricingDB | 419.5 GB | 4.3 GB | 7.71 | 10.09 | 2.37 |
| DBAUtility | 52.1 GB | 220 MB | 0 | 6.78 | 0.12 |
| ActivityDB | 4.8 TB | 53.3 GB | 16.43 | 3.75 | 29.31 |
| ReportingDB | 4.6 GB | 8 MB | 0 | 0 | 0 |
| HistoryDB | 1.6 GB | 3 MB | 0 | 0 | 0 |
| ServiceDB | 767 MB | 3 MB | 0 | 0 | 0 |
| Master | 320 MB | 3 MB | 0 | 0 | 0 |
| model | 256 MB | 1 MB | 0 | 0 | 0 |
| msdb | 612 MB | 17 MB | 0 | 0 | 0.01 |
| tempdb | 677 GB | 3.7 GB | 43.14 | 0 | 2.01 |

From this data, you can determine the resources needed for storage and memory, since the Memory Usage column shows today’s data cache size. To determine the compute, you will need to perform some simple math. The % CPU column is the share of CPU a database uses relative to the other databases on the instance. To determine the number of CPUs you need, review the average and maximum values of the Process\% Processor Time counter for sqlservr.exe.

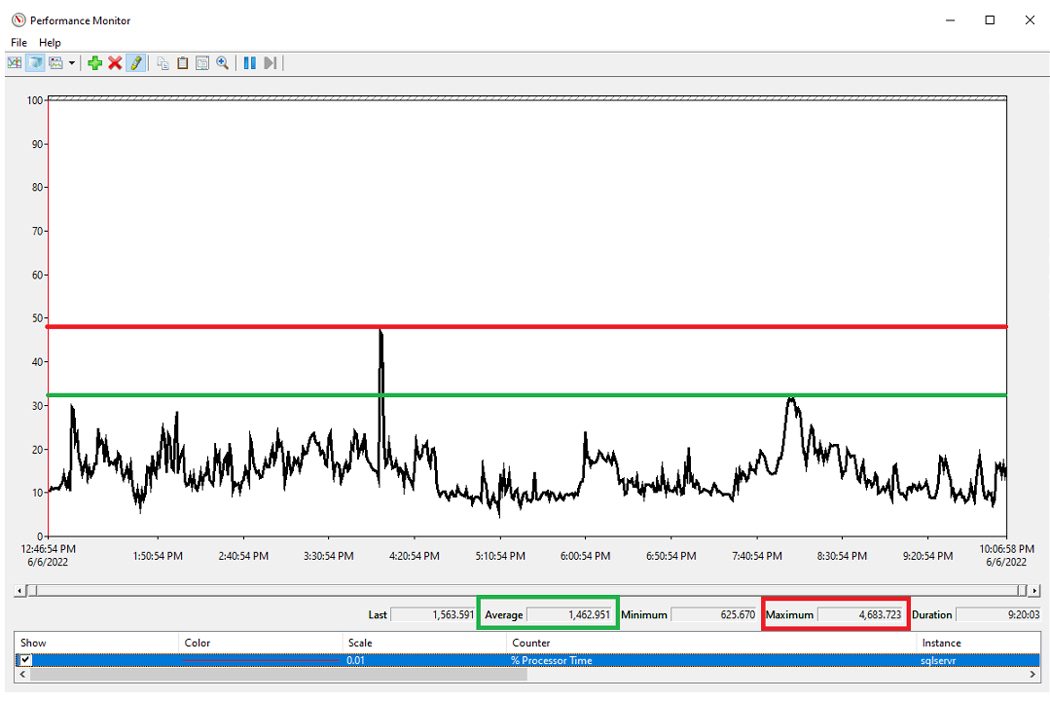

Note that the value for the sqlservr.exe process needs to be divided by 100 to determine the number of vCPUs in use by the process. In this example, the maximum CPU usage is ~46 vCPUs and MainDB uses ~69% of it, which equates to 32 vCPUs. There is an important decision here: the spike is a one-time event and might be caused by maintenance or by another database on the server, so before committing to 32 vCPUs, I would perform some research.
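The arithmetic above can be sketched in a few lines. This is an illustration under stated assumptions: the function names are hypothetical, the counter reading of 4600 is a stand-in for the "~46 vCPUs" mentioned in the text, and rounding up to a whole vCPU is a choice made here rather than something the process requires.

```python
import math


def vcpus_in_use(processor_time_pct: float) -> float:
    """Convert a Process\\% Processor Time reading into vCPUs (100% = 1 vCPU)."""
    return processor_time_pct / 100.0


def database_vcpus(processor_time_pct: float, db_cpu_share_pct: float) -> int:
    """Estimate the vCPUs attributable to one database, rounded up.

    db_cpu_share_pct is the database's % CPU relative to the other
    databases on the instance (68.75 for MainDB in the table).
    """
    share = db_cpu_share_pct / 100.0
    return math.ceil(vcpus_in_use(processor_time_pct) * share)


# Illustrative reading only: 4600 stands in for the ~46 vCPU peak.
maindb_vcpus = database_vcpus(4600, 68.75)
```

Running the same calculation against the average counter value as well as the maximum gives a sense of how much of the estimate is driven by a single spike.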

The image above shows Performance Monitor with the CPU usage for the SQL Server process.

We have the resource requirements for the MainDB database, so now we need to slot it onto a new server. But which server has enough capacity to support 32 vCPUs, ~1.6TB of data, and 18GB of data cache? This is where you will need to review your database monitoring tools to determine which SQL Server instances have enough excess CPU, memory, and storage capacity to support MainDB. An important factor to consider is that Tier 1 databases with higher data growth and transactional volumes will consume more resources over time, so they need to be monitored and managed to ensure the capacity remains optimal long term.
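Once your monitoring tool can report capacity and peak usage per instance, the capacity check itself is a simple comparison. The sketch below is one possible shape for it, not a definitive implementation: the `Resources` and `Instance` types, the instance names and figures, and the 20% reserve held back for growth are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpus: float
    memory_gb: float
    storage_gb: float


@dataclass(frozen=True)
class Instance:
    name: str
    capacity: Resources  # total resources assigned to the server
    used: Resources      # peak usage reported by your monitoring tool


def free_after_reserve(inst: Instance, reserve: float) -> Resources:
    """Free capacity once a fraction of the total is held back for growth."""
    usable = 1.0 - reserve
    return Resources(
        vcpus=inst.capacity.vcpus * usable - inst.used.vcpus,
        memory_gb=inst.capacity.memory_gb * usable - inst.used.memory_gb,
        storage_gb=inst.capacity.storage_gb * usable - inst.used.storage_gb,
    )


def candidate_instances(instances, need: Resources, reserve: float = 0.2):
    """Return names of instances that can absorb `need` on every dimension."""
    fits = []
    for inst in instances:
        free = free_after_reserve(inst, reserve)
        if (free.vcpus >= need.vcpus
                and free.memory_gb >= need.memory_gb
                and free.storage_gb >= need.storage_gb):
            fits.append(inst.name)
    return fits


# MainDB requirements from the text; the instances are made-up examples.
maindb_need = Resources(vcpus=32, memory_gb=18, storage_gb=1600)
fleet = [
    Instance("SQLPROD01", Resources(64, 512, 10000), Resources(20, 300, 4000)),
    Instance("SQLPROD02", Resources(96, 768, 20000), Resources(30, 400, 8000)),
]
matches = candidate_instances(fleet, maindb_need)
```

Holding back a reserve is one way to account for the growth of Tier 1 databases mentioned above; setting `reserve=0` compares against raw free capacity instead.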

So we know how to slot an existing database where there is historical data but how do we slot a new database with no historical data? Realistically, only one part of the process changes in the equation, which is determining how many resources the database requires over the next year. To get this data, we would ask the following questions in addition to the 4 questions at the beginning of this article:

- What are the 1-year data storage requirements?

- How many users will be using the application/database?

- Is the database a transactional, reporting, or hybrid database?

From these 3 questions, we can make some assumptions and determine which database service offering would be able to support the database. The storage is straightforward and influences the memory requirements. We typically estimate a healthy database in production would be able to cache between 5% and 15% of the data for smaller to mid-sized databases. As databases exceed 1TB in size, the percentages move down, but it is a similar calculation. The Environment Type and the number of users the database will need to support are leveraged in determining the number of vCPUs the database would use. If the environment is non-production, typically the server needs to be powerful enough to support functional testing which is a far lower resource requirement versus a production instance that executes SQL code as fast as possible. As the number of users increases, we can assume the number of transactions increases which would require more computing to support the database workload.
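The memory estimate described above can be sketched as a range derived from the 1-year storage requirement. This is a rough illustration, assuming the 5% to 15% cache ratios quoted for smaller to mid-sized databases; the function name is hypothetical, and for databases over 1TB you would pass lower ratios of your own, since the text does not give specific figures for them.

```python
def estimate_cache_gb(data_size_gb: float,
                      low_ratio: float = 0.05,
                      high_ratio: float = 0.15) -> tuple:
    """Return a (low, high) data cache estimate in GB for a new database.

    The default ratios are the 5%-15% range for smaller to mid-sized
    databases; larger databases need lower ratios supplied by the caller.
    """
    if data_size_gb < 0:
        raise ValueError("data_size_gb must not be negative")
    if not 0 <= low_ratio <= high_ratio:
        raise ValueError("ratios must satisfy 0 <= low_ratio <= high_ratio")
    return (data_size_gb * low_ratio, data_size_gb * high_ratio)


# A database expected to hold 400 GB after one year.
low_gb, high_gb = estimate_cache_gb(400)
```

For the 400 GB example, the estimate works out to roughly 20 to 60 GB of data cache, which gives a starting point that can be adjusted once historical performance data is available.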

The benefit DBAs have today is that the majority of servers are virtual, and it is very easy to add or remove 2 vCPUs anytime you need a resource change. With that said, start small when allocating resources and once you get some of the historical performance data and can review the health of the database, you can easily make resource adjustments. Then over the course of the year, usually quarterly for me, we would review the capacity and health of all SQL Server instances to adjust the sizes of the servers and/or migrate databases to instances with more capacity.

As most DBAs want to automate everything, this process can be automated as well. Most organizations are designing everything as a service, and that includes data. Our goal should be to make it as easy as possible for developers and the business to find a home for their data that meets their goals and objectives. The more successful they are, the more successful the company is, and that is job security for us.
